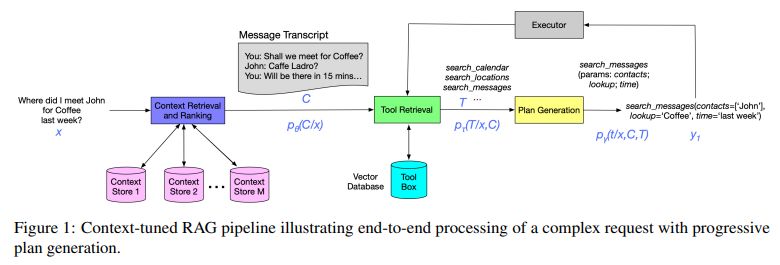

Retrieval-augmented generation (RAG) typically consists of three primary components: tool retrieval, plan generation, and execution. Existing RAG methods rely heavily on semantic search for tool retrieval, but this approach has limitations, especially when queries lack specificity or context. Context Tuning addresses this by adding a component to RAG that precedes tool retrieval. It supplies contextual understanding and context-seeking abilities, which improves both tool retrieval and plan generation.

The paper proposes a new lightweight model that uses Reciprocal Rank Fusion (RRF) with LambdaMART. Results indicate that context tuning significantly enhances semantic search: Recall@K improves 3.5-fold for context retrieval and 1.5-fold for tool retrieval, and LLM-based planner accuracy increases by 11.6%. The lightweight model outperforms the other methods evaluated and helps reduce hallucinations during planning. The work has limitations, however: it does not use conversation history for multi-turn tasks, the planner's context window size constrains performance, and it relies on synthetic personas rather than real-world data because of privacy concerns.
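
To make the two terms above concrete, here is a minimal Python sketch of the standard RRF formula and of Recall@K. It is not the paper's implementation: it covers only the rank-fusion step and omits the LambdaMART ranker entirely. The smoothing constant `k=60` is a commonly used default rather than a value taken from the paper, and the tool names and the two input rankings are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists into one using Reciprocal Rank Fusion.

    Each item scores sum(1 / (k + rank)) over the lists it appears in,
    with ranks starting at 1. k=60 is an assumed, commonly used default.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, item in enumerate(ranking, start=1):
            scores[item] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken alphabetically for determinism.
    return sorted(scores, key=lambda item: (-scores[item], item))


def recall_at_k(ranked, relevant, k):
    """Fraction of the relevant items that appear in the top-k of `ranked`."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    return len(set(ranked[:k]) & relevant) / len(relevant)


# Hypothetical rankings of tools produced by two different retrievers.
semantic_ranking = ["calendar", "email", "maps", "weather"]
keyword_ranking = ["maps", "calendar", "notes", "email"]

fused = reciprocal_rank_fusion([semantic_ranking, keyword_ranking])
print(fused)  # ['calendar', 'maps', 'email', 'notes', 'weather']

# Hypothetical ground truth: the query actually needs these two tools.
print(recall_at_k(fused, {"maps", "notes"}, k=2))  # 0.5
print(recall_at_k(fused, {"maps", "notes"}, k=4))  # 1.0
```

RRF needs only rank positions, not comparable scores, so it can combine retrievers whose raw scores sit on different scales.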

In summary, Context Tuning enhances RAG, showing improvements in retrieval, planning accuracy, and hallucination reduction over baseline methods.